(Partially updated in July 2016)

Inspired by a nice presentation at http://de.slideshare.net/oliverhankeln/opentsdb-metrics-for-a-distributed-world, I wanted to set up an OpenTSDB environment on my machine to replace the old munin monitoring that I am still using and fighting with.

This guide describes the steps for setting up OpenTSDB monitoring on an Ubuntu machine.

A word on disk space

According to the SlideShare presentation referenced above, each data point consumes less than 3 bytes on disk if compressed and less than 40 bytes if uncompressed.

With these numbers in mind, you can estimate how much data you will gather over the next year(s).
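
As a rough sketch of such an estimate, the following Python snippet multiplies the per-point upper bounds quoted above by the number of data points written per year. The workload (500 time series, one data point every 15 seconds) is a made-up example, not a measurement from my machine.

```python
# Rough disk-usage estimate for OpenTSDB data points.
# The per-point sizes are the upper bounds quoted in the presentation;
# the number of time series and the interval are hypothetical examples.

BYTES_PER_POINT_COMPRESSED = 3
BYTES_PER_POINT_UNCOMPRESSED = 40
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def yearly_bytes(series_count, interval_seconds, bytes_per_point):
    """Return an upper bound for the bytes written per year."""
    points_per_series = SECONDS_PER_YEAR // interval_seconds
    return series_count * points_per_series * bytes_per_point


# Example: 500 time series, each writing one data point every 15 seconds.
compressed = yearly_bytes(500, 15, BYTES_PER_POINT_COMPRESSED)
uncompressed = yearly_bytes(500, 15, BYTES_PER_POINT_UNCOMPRESSED)
print("compressed:   %.1f GiB per year" % (compressed / 2.0 ** 30))
print("uncompressed: %.1f GiB per year" % (uncompressed / 2.0 ** 30))
```

For this example workload the result stays just under 3 GiB per year with compression and around 39 GiB without it.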

Installing HBase

Follow https://hbase.apache.org/book/quickstart.html, download the latest binary (at the time of writing: hbase-1.2.2-bin.tar.gz) and install it, e.g. in /opt:

clorenz@machine:~/Downloads $ cd /opt

clorenz@machine:/opt $ tar -xzvf ~/Downloads/hbase-1.2.2-bin.tar.gz

clorenz@machine:/opt $ ln -s hbase-1.2.2 hbase

Next, edit conf/hbase-site.xml:

<configuration>

<property>

<name>hbase.zookeeper.quorum</name>

<value>127.0.0.1</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>file:///opt/hbase</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/opt/zookeeper</value>

</property>

</configuration>
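
To double-check what HBase will actually read from this file, a small Python sketch like the following can list the configured properties. It only relies on the standard library; the path in the usage comment assumes the /opt/hbase symlink created above.

```python
import xml.etree.ElementTree as ET


def properties(root):
    """Map each <property> name to its value in a Hadoop-style config tree."""
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.findall("property")
    }


def load_properties(path):
    """Parse a file such as hbase-site.xml and return its properties."""
    return properties(ET.parse(path).getroot())


# Usage (path is an assumption based on the installation above):
#   for name, value in sorted(load_properties("/opt/hbase/conf/hbase-site.xml").items()):
#       print("%s = %s" % (name, value))
```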

If you already have a running ZooKeeper instance, you must instruct HBase not to start its own ZooKeeper. To do so, add the following property to conf/hbase-site.xml:

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

And in conf/hbase-env.sh set the following line:

# Tell HBase whether it should manage it's own instance of Zookeeper or not.

export HBASE_MANAGES_ZK=false

In either case, with or without an existing ZooKeeper, continue by setting JAVA_HOME in conf/hbase-env.sh:

...

export JAVA_HOME=/opt/java8

...

Make sure that your local hostname resolves properly. The simplest check is:

clorenz@machine:/opt/hbase $ ping machine

PING localhost (127.0.0.1) 56(84) bytes of data.

64 bytes from localhost (127.0.0.1): icmp_seq=1 ttl=64 time=0.050 ms

64 bytes from localhost (127.0.0.1): icmp_seq=2 ttl=64 time=0.042 ms

^C
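
The same check can be scripted. The following sketch uses only Python's socket module and prints the address your local hostname resolves to; the helper name resolve is my own choice.

```python
import socket


def resolve(hostname):
    """Return the IPv4 address the hostname resolves to, or None on failure."""
    try:
        return socket.gethostbyname(hostname)
    except socket.error:
        return None


# socket.gethostname() returns the local hostname ("machine" in this guide).
local_name = socket.gethostname()
print("%s -> %s" % (local_name, resolve(local_name)))
```

If this prints None instead of an address, fix the hostname resolution (for example in /etc/hosts) before starting HBase.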

Finally, start HBase by running:

clorenz@machine:/opt/hbase $ ./bin/start-hbase.sh

With ps -ef | grep -i hbase you can verify that your HBase instance is running properly:

clorenz@machine:/opt/hbase/logs $ ps -ef | grep -i hbase

root 31701 2795 0 14:37 pts/2 00:00:00 bash /opt/hbase-0.98.9-hadoop2/bin/hbase-daemon.sh --config /opt/hbase-0.98.9-hadoop2/bin/../conf internal_start master

root 31715 31701 44 14:37 pts/2 00:00:07 /opt/java7/bin/java -Dproc_master -XX:OnOutOfMemoryError=kill -9 %p -Xmx1000m -XX:+UseConcMarkSweepGC -Dhbase.log.dir=/opt/hbase-0.98.9-hadoop2/bin/../logs -Dhbase.log.file=hbase-root-master-ls023.log -Dhbase.home.dir=/opt/hbase-0.98.9-hadoop2/bin/.. -Dhbase.id.str=root -Dhbase.root.logger=INFO,RFA -Dhbase.security.logger=INFO,RFAS org.apache.hadoop.hbase.master.HMaster start

Congratulations: You’ve finished your first step. Let’s take the next one:

Installing OpenTSDB

First, download the latest source code from GitHub:

clorenz@machine:/opt/git $ git clone git://github.com/OpenTSDB/opentsdb.git

Cloning into 'opentsdb'...

remote: Counting objects: 5518, done.

remote: Total 5518 (delta 0), reused 0 (delta 0)

Receiving objects: 100% (5518/5518), 27.09 MiB | 6.39 MiB/s, done.

Resolving deltas: 100% (3704/3704), done.

Checking connectivity... done.

Next, build a Debian package:

clorenz@machine:/opt/git/opentsdb (master)$ sh build.sh debian

If you encounter an error (e.g. ./bootstrap: 17: exec: autoreconf: not found), you are most likely missing prerequisite packages for the build. The autoreconf tool from this example is provided by the autoconf package.

If everything went well with the Debian build, you can install the package:

clorenz@machine:/opt/git/opentsdb (master)$ sudo dpkg -i build/opentsdb-2.2.1-SNAPSHOT/opentsdb-2.2.1-SNAPSHOT_all.deb

Initial preparations for OpenTSDB

Before you can run OpenTSDB, you have to create the HBase tables:

clorenz@machine:/opt/git/opentsdb (master)$ env COMPRESSION=GZ HBASE_HOME=/opt/hbase ./src/create_table.sh

At least in the beginning, it is also helpful to let OpenTSDB create metrics automatically. To do so, set the following line in /etc/opentsdb/opentsdb.conf:

tsd.core.auto_create_metrics = true

Starting OpenTSDB

sudo service opentsdb start

When you access http://localhost:4242/ you will see the OpenTSDB GUI.
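
If you prefer a scripted check over opening the browser, the TSD can also be asked for its version over the HTTP API of OpenTSDB 2.x. This is a minimal sketch for Python 3, assuming the default port 4242 used above; the function names are my own.

```python
import json
from urllib.request import urlopen


def version_url(host="localhost", port=4242):
    """Build the URL of the TSD's version endpoint."""
    return "http://%s:%d/api/version" % (host, port)


def tsd_version(host="localhost", port=4242, timeout=5):
    """Return the version string reported by the TSD, or None if unreachable."""
    try:
        with urlopen(version_url(host, port), timeout=timeout) as response:
            return json.loads(response.read().decode("utf-8")).get("version")
    except (OSError, ValueError):
        # OSError covers connection problems and HTTP errors,
        # ValueError covers a response that is not valid JSON.
        return None


# Usage:
#   print(tsd_version() or "TSD is not reachable on localhost:4242")
```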

Now it’s time to start gathering data. We’ll use TCollector for the most basic data:

Installing TCollector

Again, we fetch the source code from GitHub:

clorenz@machine:/opt/git $ git clone git://github.com/OpenTSDB/tcollector.git

Configure tcollector to use our own OpenTSDB instance by adding a single line to /opt/git/tcollector/startstop:

TSD_HOST=localhost

Starting tcollector is pretty easy:

clorenz@machine:/opt/git/tcollector (master *)$ sudo ./startstop start

It’s done!

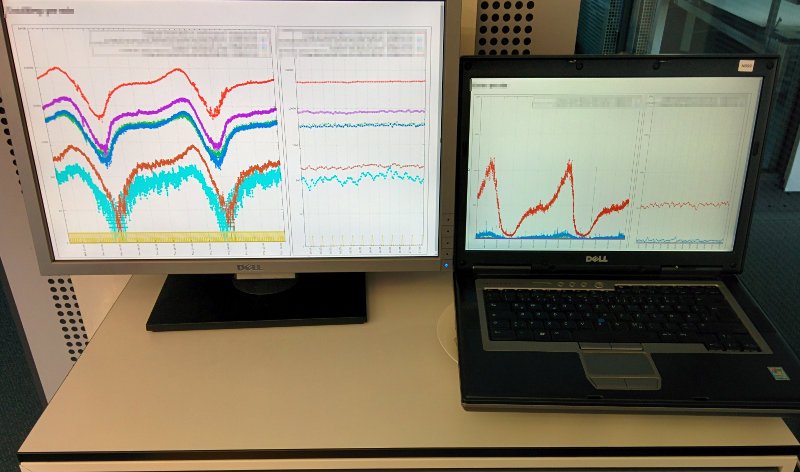

Now you can view your very first graph in the interface by selecting a time frame and the metric df.bytes.free. A graph should appear.
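
The data behind that graph is also available through the HTTP API. The following Python 3 sketch builds an /api/query request for one metric and decodes the JSON answer; host, port and the one-hour window are assumptions matching the setup above, and the helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def query_url(metric, start="1h-ago", aggregator="sum",
              host="localhost", port=4242):
    """Build an /api/query URL for a single metric."""
    params = urlencode({"start": start, "m": "%s:%s" % (aggregator, metric)})
    return "http://%s:%d/api/query?%s" % (host, port, params)


def fetch(metric, **kwargs):
    """Return the decoded JSON result (a list of time series) for the metric."""
    with urlopen(query_url(metric, **kwargs), timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (requires a running TSD that already holds data):
#   for series in fetch("df.bytes.free"):
#       print(series["tags"], len(series["dps"]), "data points")
```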

Writing custom collectors

Every collector writes one or more lines in the following format:

metric timestamp value tag1=data1 tag2=data2
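
A small helper makes it harder to get this format wrong. The sketch below is not part of tcollector; format_datapoint is a hypothetical function that joins the fields in the order shown above.

```python
import time


def format_datapoint(metric, value, tags=None, timestamp=None):
    """Return one line in the form 'metric timestamp value tag1=data1 ...'."""
    if timestamp is None:
        timestamp = int(time.time())  # seconds since the epoch
    parts = [metric, str(int(timestamp)), str(value)]
    # Sort the tags so that the output is stable between runs.
    for name in sorted(tags or {}):
        parts.append("%s=%s" % (name, tags[name]))
    return " ".join(parts)


print(format_datapoint("wavfiles.total", 42, {"type": "yesterdayradio"}))
```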

Within the collectors subdirectory of your tcollector installation, there are numerically named subdirectories that denote how often a collector is executed. A directory name of 0 stands for “runs indefinitely, like a daemon”; values greater than zero stand for “runs every n seconds”.
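
To illustrate the naming scheme, this sketch derives the run interval from a collector's path. The function names are hypothetical and the paths are examples only.

```python
import os


def collector_interval(path):
    """Return the interval in seconds encoded in the collector's directory name."""
    return int(os.path.basename(os.path.dirname(path)))


def describe(path):
    """Explain in words how often the collector at the given path runs."""
    interval = collector_interval(path)
    if interval == 0:
        return "runs indefinitely, like a daemon"
    return "runs every %d seconds" % interval


print(describe("/opt/git/tcollector/collectors/300/mystuff.py"))
```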

With that in mind, it is fairly easy to write your own collectors, as in the following example. Note that collectors do not necessarily have to be written in Python; you can use basically any language.

#!/usr/bin/python

import os

import sys

import time

import glob

from collectors.lib import utils

def main():

    # Count the matching recordings and report them as one data point.

    ts = int(time.time())

    yesterdayradio = glob.glob('/home/clorenz/data/wav/yesterdayradio-*')

    # Output format: metric timestamp value tag=data

    print("wavfiles.total %d %d type=yesterdayradio" % (ts, len(yesterdayradio)))

    sys.stdout.flush()

if __name__ == "__main__":

    sys.stdin.close()

    sys.exit(main())

You can test your collector by executing it on the shell:

PYTHONPATH=/opt/git/tcollector /usr/bin/python /opt/git/tcollector/collectors/300/mystuff.py

Pretty straightforward, isn’t it?

If you generated wrong data for some reason, you can delete it. Beware that this command is very dangerous: since the resolution is about one hour, the “1h-ago” parameter in the following command actually means “now”:

/usr/share/opentsdb/bin $ sudo ./tsdb scan --delete 2h-ago 1h-ago sum wavfiles.total type=*

Find more about manipulating the raw collected data at http://opentsdb.net/docs/build/html/user_guide/cli/scan.html
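
Because deleting is so dangerous, it helps to run the same scan without --delete first; it then only prints the matching rows. The following sketch builds both variants of the command as an argument list, which also keeps the shell from expanding the * in the tag filter. The function name scan_command is hypothetical.

```python
def scan_command(start, end, aggregator, metric, tags, delete=False):
    """Build the argument list for 'tsdb scan', optionally with --delete."""
    command = ["./tsdb", "scan"]
    if delete:
        command.append("--delete")
    command += [start, end, aggregator, metric]
    command += ["%s=%s" % (name, tags[name]) for name in sorted(tags)]
    return command


dry_run = scan_command("2h-ago", "1h-ago", "sum", "wavfiles.total", {"type": "*"})
print(" ".join(dry_run))

# Usage (run from /usr/share/opentsdb/bin, as in the command above):
#   import subprocess
#   subprocess.call(dry_run, cwd="/usr/share/opentsdb/bin")
```

Only once the dry run lists exactly the rows you want to lose should you rebuild the command with delete=True.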

Let’s now polish the whole installation with a nicer frontend to get a real dashboard:

Installing StatusWolf as frontend

Since the standard GUI of OpenTSDB is a little raw, it’s a good idea to install an alternative. The one that currently looks best, not only visually but also in terms of features such as anomaly detection, is StatusWolf. Installing StatusWolf takes only a few steps:

- install apache2

- install libapache2-mod-php5

- install php5-mysql

- install php5-curl

- ensure that mod_rewrite is working:

sudo a2enmod rewrite

sudo a2enmod actions

sudo service apache2 restart

- download StatusWolf and install it into /opt

- install pkg-php-tools

- install composer ( https://getcomposer.org/download/ ):

sudo mkdir -p /usr/local/bin

sudo chown clorenz /usr/local/bin

curl -sS https://getcomposer.org/installer | php -- --install-dir=/usr/local/bin --filename=composer

- install mysql-server (remember the root user password for later creation of the database)

- Follow the StatusWolf setup instructions (be sure to remove all comment lines in the JSON configuration!)

- Ensure that all files belong to the www-data user:

clorenz@machine:/opt $ sudo chown -R www-data StatusWolf

- Create /etc/apache2/sites-available/statuswolf.conf:

Listen 9653

<VirtualHost your.host.name:9653>

ServerRoot /opt/StatusWolf

DocumentRoot /opt/StatusWolf

<Directory /opt/StatusWolf>

Order allow,deny

Allow from all

Options FollowSymLinks

AllowOverride All

Require all granted

</Directory>

</VirtualHost>

- Link this file into /etc/apache2/sites-enabled

- Ensure that in /etc/apache2/mods-available/php5.conf, the php_admin_flag is disabled:

# php_admin_flag engine Off

- Create a user in the database (please use different values unless you want to create a security hole!):

mysql statuswolf -u statuswolf -p

INSERT INTO auth VALUES('statuswolf',MD5('statuswolf'),'Statuswolf User');

insert into users values(2,'statuswolf','ROLE_SUPER_USER','mysql');