(or: It’s the inner values that count)

Remember the last time your application was all green in your monitoring suite, but you still got complaints because it did not do what it was expected to? Or have you ever wanted to measure what your application does without going through megabytes of log files? Do you need some KPI-based monitoring? Don’t want to reinvent the wheel?

For any of these cases, the following monitoring approach, which uses standard Java JMX together with Jolokia as an HTTP bridge, will be a perfect fit!

First, let’s take a look at JMX, the Java Management Extensions:

JMX is a Java API for resource management. It is a standard from the early days of Java (JSR 3: JMX API, JSR 160: JMX Remote API) and has been part of the standard platform since Java 5; Java 6 ships version 1.4 of the API. A merge of both APIs into JMX 2.0 (JSR 255) was planned for Java 7 but never made it into a release. Basically, JMX consists of three layers: the Instrumentation Layer (the MBeans), the Agent Layer (the MBean server) and the Distributed Layer (connectors and management clients).

Although you can use JMX for managing virtually everything (even services), we concentrate here on using JMX for monitoring purposes only. The same goes for MBeans.

What are MBeans?

Generally speaking, MBeans are resources (e.g. a configuration, a data container, a module, or even a service) with attributes and operations on them. Everything else, like notifications or dynamic structures, is out of scope for us now.

Technically, an MBean is a class that implements an interface and follows a naming convention: the interface name is the class name plus “MBean” at the end:

class MyClass implements MyClassMBean

Now, let’s create a sample counting MBean:

package my.monitoring;

public interface MyEventCounterMBean {

public long getEventCount();

public void addEventCount();

public void setEventCount(long count);

}

package my.monitoring;

public class MyEventCounter implements MyEventCounterMBean {

public static final String OBJECT_NAME="my.monitoring:type=MyEventCounter";

private long eventCount=0;

@Override

public long getEventCount() {

return eventCount;

}

@Override

public void addEventCount() {

eventCount++;

}

@Override

public void setEventCount(long count) {

this.eventCount = count;

}

}
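One thing to keep in mind: eventCount++ is a read-modify-write sequence, so the counter above can lose events if several threads call addEventCount() at the same time. A minimal thread-safe sketch could back the counter with an AtomicLong. It assumes the same interface as above; the holder class CounterSketch and the helper countConcurrently are made up for this example, only so that everything fits into one file:

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical holder class so that the sketch compiles as a single file.
class CounterSketch {

    // Same management interface as in the article; it has to be public for JMX.
    public interface MyEventCounterMBean {
        long getEventCount();
        void addEventCount();
        void setEventCount(long count);
    }

    // Thread-safe variant of MyEventCounter, backed by an AtomicLong.
    public static class MyEventCounter implements MyEventCounterMBean {
        private final AtomicLong eventCount = new AtomicLong();

        @Override
        public long getEventCount() { return eventCount.get(); }

        @Override
        public void addEventCount() { eventCount.incrementAndGet(); }

        @Override
        public void setEventCount(long count) { eventCount.set(count); }
    }

    // Increments one counter from several threads and returns the final value.
    static long countConcurrently(int threads, int incrementsPerThread)
            throws InterruptedException {
        MyEventCounter counter = new MyEventCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    counter.addEventCount();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return counter.getEventCount();
    }
}
```

With the AtomicLong, countConcurrently(4, 10000) always ends at 40000; with a plain long field, the result may come out lower.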

Before we can use the bean, we have to make it available. For that, we need to register it with the MBean server. The MBean server acts as a registry for MBeans, where each MBean is registered under its unique object name. An object name consists of two parts: a domain and a list of key properties. The domain can be seen as the package name of the bean. By convention, the type key property holds its class name, and an optional name key property tells several instances of the same type apart.

So, for our example above, the ObjectName would be:

my.monitoring:type=MyEventCounter
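To make the structure of such a name tangible, here is a small sketch that parses it with javax.management.ObjectName and reads its parts back. The class name ObjectNameSketch and the second object name (with an extra name key) are assumptions for illustration only:

```java
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;

class ObjectNameSketch {

    // Returns "domain / type / name" for the given object name string.
    static String describe(String objectName) throws MalformedObjectNameException {
        ObjectName name = new ObjectName(objectName);
        return name.getDomain() + " / "
                + name.getKeyProperty("type") + " / "
                + name.getKeyProperty("name"); // null if there is no "name" key
    }

    public static void main(String[] args) throws MalformedObjectNameException {
        // The object name of our example bean.
        System.out.println(describe("my.monitoring:type=MyEventCounter"));
        // Hypothetical second counter of the same type, told apart by the "name" key.
        System.out.println(describe("my.monitoring:type=MyEventCounter,name=orders"));
    }
}
```

A malformed name, for example one without the colon, makes the ObjectName constructor throw a MalformedObjectNameException, so typos show up early.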

In every JVM, there’s at least one standard MBean server, the PlatformMBeanServer, which can be reached via

MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();

In theory, you could use more than one MBean server per JVM, but normally, using only the PlatformMBeanServer is sufficient.
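As a quick check that the platform MBean server is really there, the following sketch asks it for the MBeans the JVM registers by itself in the java.lang domain. It only uses the standard java.lang.management and javax.management APIs; the class name PlatformServerSketch and the helper namesInDomain are made up for this example:

```java
import java.lang.management.ManagementFactory;
import java.util.Set;
import javax.management.MBeanServer;
import javax.management.ObjectName;

class PlatformServerSketch {

    // Returns the object names of all MBeans registered in the given domain.
    static Set<ObjectName> namesInDomain(String domain) throws Exception {
        MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();
        // The pattern "<domain>:*" matches every MBean of that domain.
        return mbs.queryNames(new ObjectName(domain + ":*"), null);
    }

    public static void main(String[] args) throws Exception {
        for (ObjectName name : namesInDomain("java.lang")) {
            System.out.println(name);
        }
    }
}
```

The same query with the domain my.monitoring is a handy way to verify later on that your own beans have been registered.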

Next step: Accessing MBeans

To access our MBean, we can either use Spring and its magic, or we do it manually.

The manual way looks as follows:

We have to register our bean once, e.g. in an init method:

MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();

ObjectName myEventCounterName = new ObjectName(MyEventCounter.OBJECT_NAME);

MyEventCounter myEventCounter = new MyEventCounter();

mbs.registerMBean(myEventCounter, myEventCounterName);

And for every access, we have to retrieve it from the MBeanServer so that we can invoke the methods:

MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();

ObjectName myEventCounterName = new ObjectName(MyEventCounter.OBJECT_NAME);

mbs.invoke(myEventCounterName, "addEventCount", null, null);

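Did you notice the second argument of the invoke method? It is the name of the operation you want to invoke. If the operation takes arguments, you pass their values as an Object array in the third argument and their type names as a String array in the fourth. Note that this applies to operations only: for a standard MBean, getters and setters are exposed as attributes, so by default setEventCount cannot be reached through invoke. Use setAttribute with a javax.management.Attribute instead, e.g.

mbs.setAttribute(myEventCounterName, new Attribute("EventCount", Long.valueOf(number)));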
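Putting the manual steps together, here is a self-contained sketch that registers a counter, changes it through the MBean server and reads it back. Note that it sets the count with setAttribute rather than invoke: for a standard MBean, a getter/setter pair is exposed as an attribute and, by default, is not reachable through invoke. The holder class InvokeSketch, the method run and the object name with name=sketch are assumptions for this example, and the typed proxy from JMX.newMBeanProxy is an optional convenience, not something the approach depends on:

```java
import java.lang.management.ManagementFactory;
import javax.management.Attribute;
import javax.management.JMX;
import javax.management.MBeanServer;
import javax.management.ObjectName;

// Hypothetical holder class so that the sketch compiles as a single file.
class InvokeSketch {

    public interface MyEventCounterMBean {
        long getEventCount();
        void addEventCount();
        void setEventCount(long count);
    }

    public static class MyEventCounter implements MyEventCounterMBean {
        private long eventCount = 0;

        @Override
        public long getEventCount() { return eventCount; }

        @Override
        public void addEventCount() { eventCount++; }

        @Override
        public void setEventCount(long count) { this.eventCount = count; }
    }

    // Registers a counter, drives it through the MBean server, returns the final count.
    static long run() throws Exception {
        MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();
        // Separate name for this sketch, so it cannot clash with the article's bean.
        ObjectName name = new ObjectName("my.monitoring:type=MyEventCounter,name=sketch");
        mbs.registerMBean(new MyEventCounter(), name);
        try {
            // The setter is exposed as the writable attribute "EventCount".
            mbs.setAttribute(name, new Attribute("EventCount", 40L));

            // addEventCount() is an operation without arguments, so invoke() applies.
            mbs.invoke(name, "addEventCount", null, null);

            // A typed proxy hides the string-based calls behind the interface.
            MyEventCounterMBean proxy =
                    JMX.newMBeanProxy(mbs, name, MyEventCounterMBean.class);
            proxy.addEventCount();

            // Attributes are read by name, without the "get" prefix.
            return (Long) mbs.getAttribute(name, "EventCount");
        } finally {
            mbs.unregisterMBean(name); // keep the server clean for repeated runs
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(run()); // 40 set + 2 increments = 42
    }
}
```

The attribute value has to be a Long here; passing an Integer for a long attribute is rejected by the MBean server.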

If we’re lucky and our whole application is managed by Spring, it’s sufficient to work with configuration and annotations only.

The MBean needs to be annotated with @Component and @ManagedResource, with the object name as parameter:

@Component

@ManagedResource(objectName="my.monitoring:type=MyEventCounter")

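The getters and setters need a @ManagedAttribute, and the operations a @ManagedOperation:

@Override

@ManagedAttribute

public long getEventCount() {

return eventCount;

}

@Override

@ManagedOperation

public void addEventCount() {

eventCount++;

}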

In your Spring configuration, besides the <context:component-scan> tag, you need one additional line for exporting the MBeans:

<context:mbean-export/>

The classes that want to use the bean just have to inject it with the @Autowired annotation:

@Autowired

private MyEventCounterMBean myEventCounterMBean;

Accessing MBeans from outside, using Jolokia

Of course, you can access your MBeans with jconsole, but a more elegant and more firewall-friendly way is to use an HTTP bridge, which lets you access the MBeans over HTTP. That’s where Jolokia joins the game.

Jolokia is a JMX-JSON-HTTP bridge, which gives access to your MBeans over HTTP and returns their attributes as JSON. Nice, isn’t it? Besides that, it supports bulk requests for improved performance, has a security layer to restrict access, and is really easy to install.

If you want to monitor a webapp that runs inside Tomcat, all you need to do is deploy the Jolokia agent webapp (available as a .war file) into your Tomcat.

For a standalone Java application, just add the Jolokia JVM agent (which in fact acts as an internal HTTP server) as a javaagent in your start script:

java -javaagent:$BASE_DIR/libs/jolokia-jvm-1.2.1-agent.jar=port=9999,host=*

And if you build with Gradle, add the following line to your build.gradle:

runtime (group:"org.jolokia", name:"jolokia-jvm", classifier:"agent", version:"1.2.1")
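Once the agent is listening, reading an attribute is nothing more than an HTTP GET on <base>/read/<mbean>/<attribute>, and a bulk request is a POST of a JSON array of such read requests to the base URL. The following sketch shows both from Java. It assumes Java 11 or later (for java.net.http), the host and port placeholders used in this article, and object names without special characters (Jolokia has its own escaping for those, which is left out here). The class and method names are made up for this example:

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class JolokiaReadSketch {

    // Builds the Jolokia GET URL for one attribute: <base>/read/<mbean>/<attribute>.
    static URI readUri(String baseUrl, String mbean, String attribute) {
        return URI.create(baseUrl + "/read/" + mbean + "/" + attribute);
    }

    // Builds the JSON body of a bulk read: one request object per attribute.
    static String bulkReadBody(String mbean, String... attributes) {
        StringBuilder json = new StringBuilder("[");
        for (int i = 0; i < attributes.length; i++) {
            if (i > 0) {
                json.append(',');
            }
            json.append("{\"type\":\"read\",\"mbean\":\"").append(mbean)
                .append("\",\"attribute\":\"").append(attributes[i]).append("\"}");
        }
        return json.append(']').toString();
    }

    // Sends the GET request and returns the raw JSON answer of the agent.
    static String read(String baseUrl, String mbean, String attribute) throws Exception {
        HttpRequest request =
                HttpRequest.newBuilder(readUri(baseUrl, mbean, attribute)).GET().build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        // Placeholder host and port; replace them with your own agent address.
        System.out.println(read("http://your.application.host:9999/jolokia",
                "my.monitoring:type=MyEventCounter", "EventCount"));
    }
}
```

The answer is a JSON object; the attribute itself is found in its value field, next to a status field that tells whether the request succeeded.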

Helpers – jmx4perl

Now that we can access our MBeans from outside, it would be nice to have a tool that just reads the values on the command line. The best tool for that is jmx4perl, which is available on GitHub at https://github.com/rhuss/jmx4perl

The installation is reminiscent of the good old Perl days with CPAN. If you’ve never worked with CPAN, just install jmx4perl according to the documentation and confirm all questions.

Now, let’s get an overview of all available MBeans:

jmx4perl http://your.application.host:9999/jolokia list

And if you want a dedicated bean, run:

jmx4perl http://your.application.host:9999/jolokia read my.monitoring:type=MyEventCounter

The output is printed as a Perl-style data structure and will look like:

{

EventCount => 234,

Name => 'MyEventCounter'

}

And finally, if you just need one attribute, run:

jmx4perl http://your.application.host:9999/jolokia read my.monitoring:type=MyEventCounter EventCount

In that case, you’ll get nothing but the value as a result.

Let’s go!

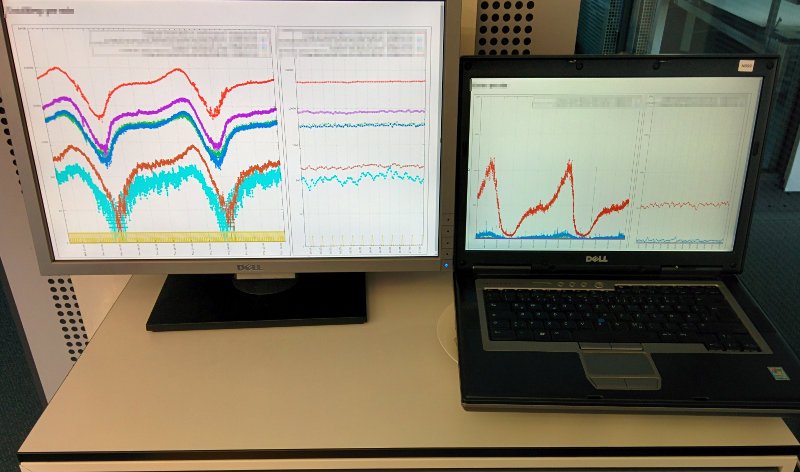

With these tools and figures, you can monitor virtually everything inside your application. All you have to do now is provide the data (as the developer of your application, you know best what exactly should be monitored) and monitor it with Nagios, OpenTSDB, or whatever you want. All these tools are able to access, process and monitor the data, either directly or with helpers like jmx4perl.